Hacking Our Cultural Heritage Datasets

April 28 and 29 was Transparency Camp 12 (TCamp), an unconference that gathers journalists, technologists, activists, and others to work on ways to promote and work with openness in government. The 30th was a special hack day on the Voter Information Project. Turns out, I was in over my head in that context and couldn't really contribute. So instead I ditched and built an Omeka site to display the data in the catalog of government agency datasets CSV file from Data.gov (full disclosure for those who don't know me--I work on Omeka for the Roy Rosenzweig Center for History and New Media).

Here's why.

Data Sets Are Cultural Heritage Artifacts

If we live in the "information age", or better yet the "data age", then the sets of data that we, as a society, collect and use reflect that fact. They are cultural products of the time. What data was collected (and how, and in what formats) says something about this cultural moment. As such, they deserve to be treated as part of our cultural heritage just as much as artifacts from, say, the "space age". So, using Omeka to republish datasets makes sense.

"Hacking" Need Not Be Reserved For Techies

I have to say I was a little surprised that I didn't see more of the DIY spirit at TCamp. Maybe that's because of my expectations from THATCamps. Either way, during one of the sessions the state of Data.gov was discussed. There was much dissatisfaction with the site, in terms of UI and UX, updating, and more. But I still think it is remarkable that there is so much data there to grab and play with. That situation reminded me of the sagacity of Butthead when he said, "This sucks! Change it!" Putting aside for the moment the issue of data not being updated, if some useful and interesting data is there for the download but packaged in a bad site, I want to do something about it.

I'll call that hacking, even if it doesn't involve touching any code at all. It's taking data, manipulating it, and repurposing it. That's what coders do. But, with the data available, noncoders can also engage in that activity of hacking. I want to send that message to the TCamp crowd to encourage more people to engage in low-tech-level hacking on the data that's there.

What I Did

I grabbed the CSV file (N.B. they say this set is updated daily, so it's the snapshot as of April 30, 2012) and poked around in it a little just to see what was there and what munging I might need or want to do. It's a one-day hackathon, so I didn't want to get too fancy, but it did look like I'd want to do some work to distinguish the datasets listed and the agencies producing them. I created item types in Omeka for "Dataset" and "Agency" (I decided to mostly ignore, for now, the distinction in the data between agency and sub-agency, but did build a field for parent agency), with some associated metadata for each. Then the CSV Import plugin nommed it all in. I created three different imports on the same file: one to map data onto datasets, one to make the agencies, and a third to make agencies out of the sub-agencies.

For the sake of discovery, I was pretty liberal with mapping data to tags. For all three imports, the category and keywords CSV fields were mapped onto both Dublin Core subjects and onto tags.
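The actual mapping happened in the CSV Import plugin's interface, with no code involved. For anyone who thinks better in code, though, here is a rough Python sketch of what those three passes over the same file amount to. The column names, the sample row, and the `;` keyword delimiter are all made up for illustration; the real Data.gov catalog headers may well differ.

```python
import csv
import io

# Hypothetical column names and a made-up row, for illustration only.
SAMPLE = """title,agency,subagency,category,keywords
Air Quality Readings,Environmental Protection Agency,Office of Air and Radiation,Environment,"air; pollution"
"""

def split_terms(value):
    """Split a delimited cell into clean terms (the ';' delimiter is assumed)."""
    return [term.strip() for term in value.split(";") if term.strip()]

def to_dataset(row):
    """First pass: one Dataset per row, with terms doubled as subjects and tags."""
    terms = split_terms(row["category"]) + split_terms(row["keywords"])
    return {
        "type": "Dataset",
        "title": row["title"],
        "agency": row["agency"],  # still plain text at this stage
        "subjects": terms,        # stands in for Dublin Core Subject
        "tags": terms,            # same terms reused as tags
    }

def to_agency(row):
    """Second pass: one Agency per row (duplicates and all)."""
    return {"type": "Agency", "title": row["agency"], "parent": None}

def to_subagency(row):
    """Third pass: sub-agencies become Agency records with a parent."""
    return {"type": "Agency", "title": row["subagency"], "parent": row["agency"]}

rows = list(csv.DictReader(io.StringIO(SAMPLE)))
datasets = [to_dataset(r) for r in rows]
agencies = [to_agency(r) for r in rows]
subagencies = [to_subagency(r) for r in rows if r["subagency"]]
```

Note that the second pass produces one agency per row, which is exactly why duplicates pile up.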

I broke up the original CSV file into parts to fit into upload size restrictions on my host. After three or four parts, I noticed that the data was corrupted -- the headings no longer matched up with the data in the columns. In the interest of pushing out a proof of concept, I declared "meh" and stopped after those parts. Some data cleanup would probably produce roughly two to two-and-a-half times the amount of data now in the site.
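I never tracked down what went wrong in my file, so take this as a general precaution and not a diagnosis. One common way columns drift out of line is cutting a CSV on raw line breaks when a quoted field contains its own newlines. A minimal Python sketch that splits by parsed records, repeats the header in every part, and flags rows whose field count doesn't match the header might look like this (the function names are mine, not part of any tool):

```python
import csv
import io

def write_part(header, rows):
    """Serialize one part as CSV text, starting with the header row."""
    out = io.StringIO(newline="")
    writer = csv.writer(out)
    writer.writerow(header)
    writer.writerows(rows)
    return out.getvalue()

def split_csv(text, rows_per_part):
    """Split CSV text into parts by parsed record, repeating the header in each."""
    reader = csv.reader(io.StringIO(text, newline=""))
    header = next(reader)
    parts, current = [], []
    for row in reader:
        current.append(row)
        if len(current) == rows_per_part:
            parts.append(write_part(header, current))
            current = []
    if current:  # leftover rows form a final, shorter part
        parts.append(write_part(header, current))
    return parts

def misaligned_rows(text):
    """Return 1-based record numbers whose field count differs from the header's."""
    reader = csv.reader(io.StringIO(text, newline=""))
    header = next(reader)
    return [i for i, row in enumerate(reader, start=1) if len(row) != len(header)]

# Made-up sample: the first record has a newline inside a quoted field.
sample = 'title,description\r\nA,"line one\nline two"\r\nB,plain\r\nC,plain\r\n'
parts = split_csv(sample, rows_per_part=2)
```

Running `misaligned_rows` on each part before uploading would at least show where the trouble starts.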

An implication of the idea that datasets are cultural heritage artifacts is that there should be some narrative and interpretation built up around them. So, just to demonstrate that idea, I built a simple exhibit.

It would also be nice for people to contribute their own stories or information, so I fired up the MyOmeka and Commenting plugins.

Done. Data hacked and repurposed. Notice, I haven't touched a line of code yet. BUT HACKING WAS ACHIEVED!

Next, code hacking to add some niftiness.

One thing that I wish Omeka did better is create relations between items, like between the Dataset and Agency types I'd created. So I built some scripts to first clean up the multiple representations of each Agency that appeared -- you'll notice that each row of the CSV would make a new Agency, thus many duplicates. Plus, in that easy import, the agency for each dataset was recorded as plain text. Once I had removed the duplicates, it was fairly easy to go back through the datasets and change the plain text info about the agency (and subagency) into a link to the record in Omeka. Last, I fired up another script to add links between the parent and child agencies.

UPDATE: Oops! Looks like the script isn't working right with all the sub-agencies. Might be that only one is surviving the rewrite into a link.
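These are not the scripts I actually ran against Omeka. They are a stripped-down Python sketch of the same idea, with plain dictionaries standing in for Omeka items and made-up records: collapse duplicate agencies by a normalized name, then swap each dataset's plain-text agency for the id of the surviving record.

```python
def normalize(name):
    """Collapse case and whitespace so near-identical agency names match."""
    return " ".join(name.split()).casefold()

def dedupe_agencies(agencies):
    """Keep the first record per normalized name; return survivors and an id lookup."""
    survivors, id_by_name = [], {}
    for agency in agencies:
        key = normalize(agency["title"])
        if key not in id_by_name:
            id_by_name[key] = agency["id"]
            survivors.append(agency)
    return survivors, id_by_name

def link_datasets(datasets, id_by_name):
    """Attach the surviving agency's id to each dataset (None when nothing matches)."""
    for dataset in datasets:
        dataset["agency_id"] = id_by_name.get(normalize(dataset["agency"]))
    return datasets

# Hypothetical records standing in for imported items.
agencies = [
    {"id": 1, "title": "Department of Energy"},
    {"id": 2, "title": "department of  energy"},
    {"id": 3, "title": "Department of Labor"},
]
datasets = [{"title": "Example dataset", "agency": "Department of Energy"}]

survivors, id_by_name = dedupe_agencies(agencies)
linked = link_datasets(datasets, id_by_name)
```

The same lookup could serve the parent and child links, since a sub-agency's parent is also recorded as plain text.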

Granted, this came together so quickly because I'm so familiar with Omeka, its plugins, and what they can do. Even still, data can be repurposed into something that invites user feedback and building pretty easily. I think Omeka is good for the job for that philosophical reason of treating datasets as cultural heritage artifacts. But a savvy Drupal person could probably do similarly awesome things without writing code. Not sure, but I suspect that there are tools in Drupal that would get farther before code-writing became necessary. The point is, if there's someone in your organization with some familiarity with any CMS, you're in a great position to start doing things with the data that's available now.

Anything Useful Here?

I think so. Complaints about interface, design, and user experience can certainly be lodged. I just took one of the available Omeka themes without modification beyond what's in the theme configuration. But, as proof of concept, the important point is that we can go in and change it when we want to. In other words, the same data that's on data.gov has been moved into a context that lets us change the context and the interaction to our needs. That's a big step forward.

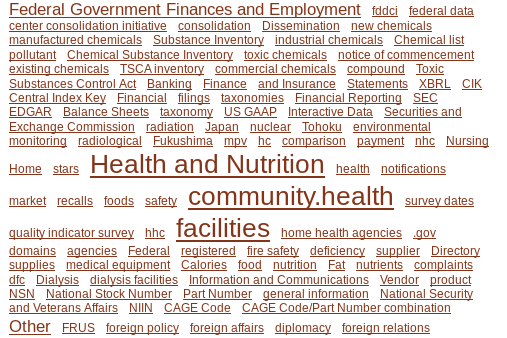

Out of the box, one of the interesting things is the tag cloud.

What Next?

The site is definitely a proof of concept of how we can start hacking the data that's available. Completely missing in this exercise is the really important call-to-action step. The site is almost completely about information sharing, both by giving a new view on the catalog, and by inviting site users to augment the information being displayed. Given the extraordinary site designs I saw at TCamp, there's certainly room to develop the theme (it's just out-of-the-box Emiglio), and do something really interesting that way. It's also possible that a different set of data could lend itself more to something that could become a call to action. Here are a few possible directions.

- Crowdsourced monitoring of compliance with dataset release

  - It would be trivial to build a plugin to mark which datasets are in compliance with their stated release schedule. Something fancier could record a history of compliance. That monitoring could be crowdsourced. If we had 100 people agreeing to check on 10 datasets each every quarter, that'd cover things pretty well. And that would help keep pressure on agencies to adhere to their stated goals.

- Patterns of data

  - Narratives are great for explaining patterns that we see, and that's what Omeka's exhibits are good for. We could bring together datasets to explain why they are important in aggregate, and develop further directions for research, both journalistic and sociological.
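To make the compliance idea a bit more concrete, here is a minimal Python sketch of the check such a plugin might perform. It is not a real Omeka plugin (those are written in PHP), and the frequency labels and allowed gaps are my assumptions, not values taken from the catalog. It also spells out the volunteer arithmetic: 100 people checking 10 datasets each covers 1,000 datasets a quarter.

```python
from datetime import date, timedelta

# Assumed frequency labels and allowed gaps; real catalog values may differ.
MAX_GAP = {
    "daily": timedelta(days=1),
    "weekly": timedelta(days=7),
    "monthly": timedelta(days=31),
    "quarterly": timedelta(days=92),
    "annually": timedelta(days=366),
}

def is_compliant(last_updated, frequency, today):
    """True if updated within the stated schedule, False if late, None if unknown."""
    gap = MAX_GAP.get(frequency.strip().lower())
    if gap is None:
        return None  # unrecognized schedule: leave it for a human to judge
    return today - last_updated <= gap

def datasets_covered(volunteers, datasets_each):
    """How many datasets get checked per round of volunteer effort."""
    return volunteers * datasets_each

checked = is_compliant(date(2012, 4, 1), "Monthly", date(2012, 4, 30))
coverage = datasets_covered(100, 10)
```

Recording each result with its date, instead of overwriting it, would give the history of compliance.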

As a proof of concept, I'm not entirely sure how much additional work I'll be doing with the site. If interesting things start to happen there, I'll maintain it as best I can, and if things really get interesting I'll build up feature requests. But if we get to that point, I'll need help. If people want to join the site at a level to build more exhibits, I'll certainly add you in. If you want to be an admin, all the better! My real hope, though, is that others will want to try a similar approach with different sets of data.

"Any medium powerful enough to extend man's reach is powerful enough to topple his world. To get the medium's magic to work for one's aims rather than against them is to attain literacy."

-- Alan Kay, "Computer Software", Scientific American, September 1984
